Basic Course Info

Instructor: Johannes Eichstaedt (eich) (he/him)

Teaching Assistant: Cedric Lim (limch)

Class: Tue / Thu – 12:00 PM - 1:20 PM PT (80 mins)

Office hours:

Johannes - TBD – Room 136 in Bldg. 420. Via zoom by quick email.

Cedric – TBD

Textbook: None. We will use papers/PDFs.

Colab notebooks: public github repo – copy to your drive

Prerequisites: Decent ability to code in R. Familiarity with multivariate regression and basic statistics of the social sciences. NOT required but helpful: Python, SSH, SQL (we will teach you what you need to know). Biggest requirement: knowing what science is, and wanting to learn.

** All-In-One DLATK colab: Feat Extraction, Correlation, Topic modeling** – this is being updated.

The 2023 syllyabus is here. If you want to do something to prepare for the course, read Eichstaedt et al., 2020 from the readings folder. Ethics Advice for the course was previously contributed by Kathleen Creel.

Course Road Map

- Block 1: Intro & SQL

- Block 2: Closed-vocab analyses (dictionaries, emotions)

- Block 3: Open-vocab analyses

- Block 4: Clustering, Topics

- Block 5: Intro to ML, Generative Models (GPT!)

- Block 6: Advanced Topics & Team Projects

Week 1 - Intro to the course & SQL (Block 1)

Tuesday, 4/1 - Lecture 1 - Intro to course, why DLATK, intro to computing infrastructure

Thursday, 4/3 - Lecture 2 - Getting started with SQL workshop – PLEASE BRING YOUR LAPTOP TO CLASS

Tutorials:

-

MySQL LinkedIn tutorial (OPTIONAL): SQL essentials (2019) - ONLY UNTIL SECTION 8 (~2 hrs). Hosted by linkedIn learning, login with your SUNET. If after us going over SQL in lecture 2 and the Colab tutorials 1 and 2 you still feel you’d like more SQL training, go along with this tutorial, it uses the same databases we put on the server for you. Skip the sections 9 onward, we don’t need those.

-

A helpful resource: ongoing command log

Colab Tutorials:

These Colab notebook tutorials will be linked for you from here or canvas.

- Tutorial 1: [Intro to SQL]. We start this together in lecture 2. (all the basics: SELECT…GROUP BY…JOIN…INDICES etc.)

- Tutorial 2 (OPTIONAL): Intermediate MySQL: working with Tweets. This is a little more “real world” and advanced, it tackles two major data wrangling chores: how to deal with duplicate rows, and how to finnagle timestamps into shape to work with them.

Homework:

- [Homework1]: due W2.2 before class via submission to canvas (either Colab notebook or html save of it). It will be linked from TBD.

Readings:

The readings folder is here.

- Block1 - A - Eichstaedt - Intro to Text analysis_psych methods_sp

keep reading this, this is the key reading for the course - Block1 - B - Iliev - Overview - text analysis in psychology

- Block1 - C - Grimmer, Stewart - 2013 - Text as data The promise and pitfalls of automatic content analysis methods for political texts

Tutorials and Homeworks **are always released on Thursday after class the latest, and due the next Thursday before class. **

Week 2 - Intro to NLP (Block 1, 2)

Tuesday, 4/8 - Lecture 3 (W2.1) - The field of NLP, different kinds of language analyses

Thursday, 4/10 - Lecture 4 (W2.2) - meet DLATK & feature extraction (intro to new tutorials)

Tutorials:

- Tutorial 3: DLATK feature tables, 1gram feature extraction (DB: dla_tutorial)

-

Tutorial 4: Introduction to Meta Tables (DB: dla_tutorial)

}} They will be linked, and have space for your exercises.

Homework:

- Homework 2: Homework 2: 1gram extraction and feat tables, meta tables – released after W2.2, due before class in W3.2

Readings: (“due” by W3.1)

- Block1 - A - Eichstaedt - Intro to Text analysis_psych methods_sp

keep reading this, this is the key reading for the course - Block2 - B - Mehl -Text analysis Handbook

a very nice, accessible intro with great summaries. Step-by-step walk throughs.

Week 3 - Dictionaries: GI, DICTION, LIWC (Block 2)

Tuesday, 4/15 - Lecture 5 - Dictionary evaluation, and history

Thursday, 4/17 - Lecture 6 - DLATK lex extraction, GI, DICTION

Tutorials:

- Tutorial 5: Tutorial_05DLATK_lexicon_extraction_HW3(dla_tutorial) – with HW3

Homework:

- Homework 3: (released after W3.2, due before W4.2) is embedded at the end of the Colab Tutorial 5.

Readings: (“due” by Thursday, 1/26)

- Block1 - A - Eichstaedt - Intro to Text analysis_psych methods_sp

keep reading this, this is the key reading for the course - Block2 - A - Kern - DLATK psych methods – discussion of DLATK use in psychology

- Block2 - A - Tausczik - The Psychological Meaning of Words

GREAT APPENDIX – good intro to LIWC and dictionary literature

Week 4 - LIWC, annotation-based and sentiment Dictionaries (ANEW, LABMT, NRC) (Block 2)

Tuesday, 4/22 - Lecture 7 - LIWC, word-annotation based dictionaries ANEW, LabMT

Thursday, 4/24 - Lecture 8 - DLATK lexicon correlations, sentiment dicts NRC

Tutorials:

- Tutorial 6: Colab notebook (with HW4) about weighted dictionaries, correlations, controls

Homework:

- Homework 4: Embedded within Tutorial 6 (due W5.2)

- Block1 - A - Eichstaedt - Intro to Text analysis_psych methods_sp

keep reading this, this is the key reading for the course - Block2 - A - Pennebaker - Psychological aspects of natural language. use our words, our selves

A well-structured excellent review of the LIWC literature - Block2 - A - Tausczik - The Psychological Meaning of Words

GREAT APPENDIX – good intro to LIWC and dictionary literature

Week 5 - Sentiment dictionaries and R (Block 2)

Tuesday, 4/29 - Lecture 9 - Types of Science with NLP, Intro to Open Vocab, power calculations

Thursday, 5/1 - Lecture 10 - data import, R and DLATK

Tutorials:

- Tutorial 7: Colab notebook (w %%R) about data import and creating figures from the meta features

- Tutorial 8: R mark down script going through homeworks 1-3 in R – in your home folder on the server (

~/), as are the CSVs you need for it (Tutorial_07_data_msgs.csvandTutorial_07_data_outcomes.csv). - Video Tutorial 7: going over HW2 (largely the same as Tutorial 8, but with narration)

- Video Tutorial 8: going over HW3 (largely the same as Tutorial 8, but with narration)

Homework

- Homework 5: R markdown script, importing feature tables to do stats and plotting in R (due W6.2)

Homework 5 files:

Messages CSV

Outcomes CSV

- Block2 - B - Using Emotion dictionaries on social media – a discussion of how dictionaries misfire

- Block2 - C - DLATK technical paper

- Block3 - A - Schwartz - The Open-Vocab Appraoch – the canonical intro to open-vocabulary analyses with 1k+ citations

- Block3 - C - LabMT do study San Diego neighborhoods (didn’t work)

Week 6 - Introduction to open Vocab (Block 3)

Tuesday, 5/6 - Lecture 11 - Embeddings and Topics

Thursday, 5/8 - Lecture 12 - DLATK: 1to3gram feature extraction with occurrence filtering and PMI

Tutorials:

- Tutorial 9: Colab notebook on 1to3gram extraction with HW6

Homework:

- Homework 6: Embedded within Tutorial 9 (due W7.2)

Download Word cloud Powerpoint template

- Block3 - A - Schwartz - The Open-Vocab Appraoch

the canonical intro to open-vocabulary analyses with 1k+ citations - Block3 - C - Kern, DevPsych - Using Languageto Study Development

Week 7 - Embeddings and Topic Modeling (Block 4)

Tuesday, 5/13 - Lecture 13 – LDA topics, and discussion of good studies

Thurday, 5/15 - Lecture 14 – DLATK for topics, and topic conceptual review

Tutorials:

- Tutorial 10: extracting and correlating (existing) topics, and understanding topic feat table

- Tutorial 11: modeling your own topics with MALLET

Homework:

- Homework 7: Extracting and correlating topics (last official tutorial homework! :) ) (Due W8.2)

Readings: (“due” by Thursday, 2/23)

- Block4 - A/B - PsychReview - Topics in Semantic Representation

This is THE paper on topics in the social sciences. Very dense and long (try to read the first half, or use the annotated version I posted) - Block4 - A - Atkins - Topic models A novel method for modeling couple and family text data.

I’m really fond of this paper – very cool application of topic models, very accessible, well interpreted. - Block4 - C - Jurafsky chapter on vector embeddings => if you’d like a deep dive on vector embeddings with the full math

- Block4 - B - SHORT! - The amazing power of word vectors => a somewhat helpful blog post summary of vector semantics

Week 8 - Intro to ML (Block 5)

Tuesday, 5/20 - Lecture 15 - Intro to Machine learning

Thursday, 5/22 - Lecture 16 - Final Projects Intro, Reddit Scraping, More Machine Learning

Tutorials:

- Tutorial 12: OPTIONAL: Reddit Scraping for final projects

Homework:

- Work on pre-presentation of final project (due in class 3/9). Use Tutorial 07 to wrangle your data onto the server and produce descriptives.

- Block5 - A - Yarkoni on the Why of prediction => a recent instant classic, it makes the argument why we in the social sciences should care about prediction. read for the accessible intro to ML concepts.

- Block5 - C - Mehl - Natural language indicators of differential gene => an example of using language to pick up on genotype.

Week 9 - ML: deep learning & pre-presentations (Block 5)

Tuesday, 5/27 - Lecture 17 - Deep Learning with Andy Schwartz

Guest lecture by Andy Schwartz

Thursday, 5/29 - Lecture 18 - Final Project Pre-Presentations (please add to shared slide deck).

Tutorials:

- Tutorial 13: OPTIONAL: Basic Machine learning (regression, classification)

Homework:

- Final project

Readings: Read what’s relevant for your final projects!

Week 10 - Guest Lecture & LLMs

Tuesday, 6/3 - Lecture 19: Overview of Generative Large Language Models and their Applications Thursday, 6/5 - Lecture 20: Project presentations

Tutorials:

- Review tutorials as needed for final project

Homework:

- Final project

- (read what’s relevant for your final projects)

Week 11 ( Finals week ) - Course summary and project presentations

Tuesday, 6/10 - Lecture 21 - Project presentations

Tutorials:

- Review tutorials as needed for final project

Homework:

- Final project

Readings: (read what’s relevant for your final projects)

Command logs for VTutorials

- Here is an ongoing SSH, Shell, Colab command log

- Ongoing command log for MySQL and DLATK

- FYI: Setup Local SSH Keys on Mac**

Working on a Linux cloud server

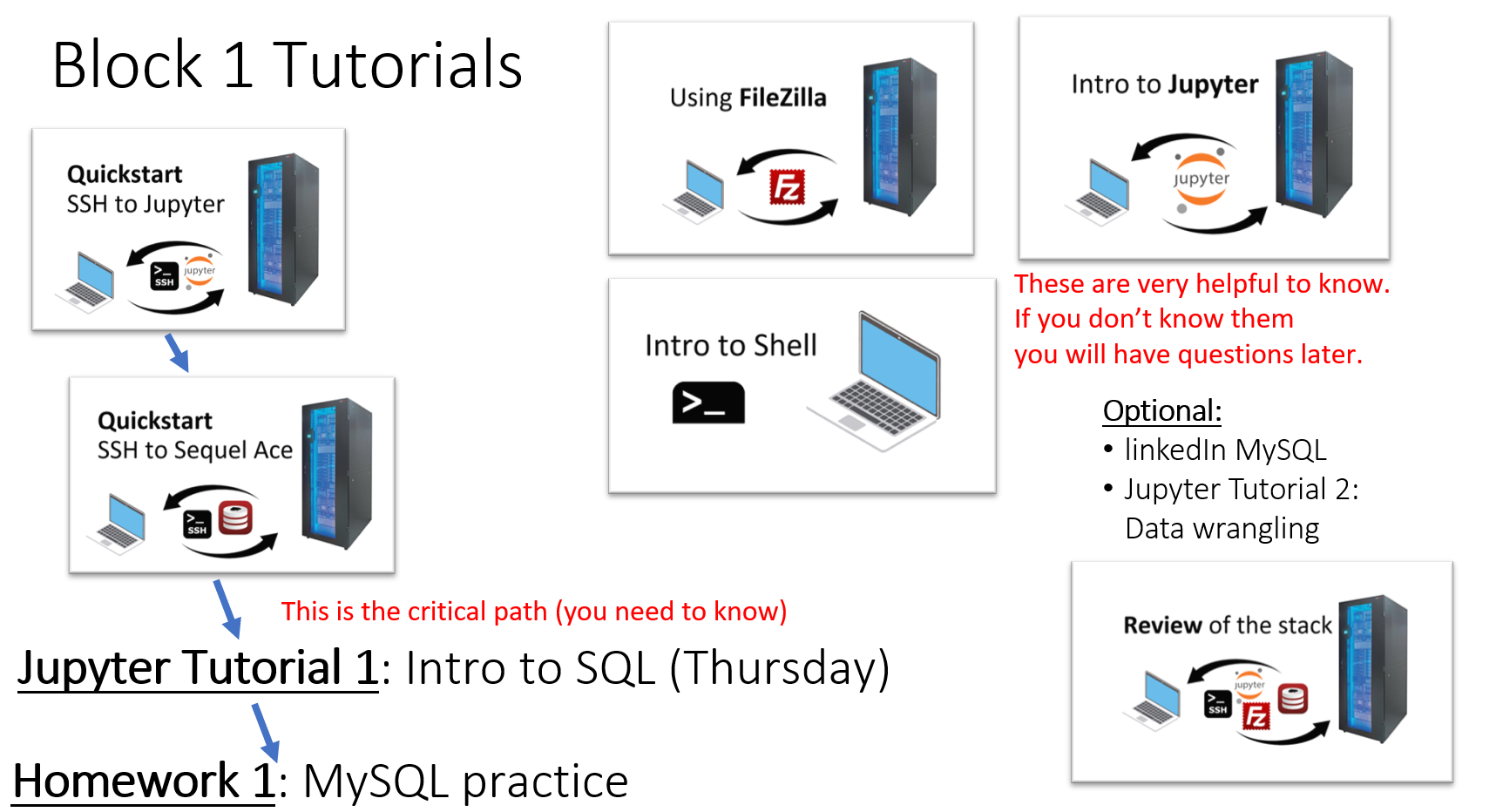

To work on our Linux cloud server if needed later in the course (for big datasets, > 2,000 rows “messages”/text samples, say), you’d need to learn how to SSH into a cloud server, tunnel ports, connect SQL graphical user interfaces, and then tunnel Colab into your local browser. This is explained in a sequence of tutorials:

MAC:

- VTutorial 1 - Mac: VTutorial 1: Quickstart - SSH to Colab (8 min)

- Download and install Sequel Ace

- VTutorial 2 - Mac: Quickstart SSH to Sequel Ace (9 min)

WINDOWS:

- VTutorial 1 - Windows: VTutorial 1: Quickstart - SSH to Colab (4 min)

- Download and install HeidiSQL

- VTutorial 2 - Windows: Quickstart SSH to HeidiSQL (6 min)

less urgent:

- VTutorial 3 FileZilla – how do do you copy files from and to the server (3 min)

- VTutorial 4 - Intro to Shell (32 min) (navigate the file system from the terminal, on your own system or the Server)

- VTutorial 5 (OPTIONAL) (50 min) - Review of the stack (SSH keys, bash, port forwarding, filezilla, mysql GUI, Colab) – this one is 50 minutes. I’ve you’ve watched everything else, and feel confident, you can skip this. Just goes through everything one more time (from 2020).

- VTutorial 6 - Intro to Colab (23 min). Also see command log. how do you write markdown cells, execute cells, connect from Colab to SQL and get DLATK to run.

Basic logistics

This site will be kept up-to-date.

Readings are prioritized (A > B > C), and are in our readings Google Drive folder.

VTutorials: Video tutorials are in the unlisted class youtube channel.

Colab worksheets will be in your home folder. Homeworks will be there or posted here.

Lecture slides are linked from Canvas.

Communication will happen via our slack channel – please access via Canvas to set up for the first time.

Course background and scope

What is this course?

This is an applied course with emphasis on the practical ability to deploy computational text analysis over data sets ranging from hundreds to millions of text samples, and mine them for patterns and psychological insight. These text samples can be social media posts, essays, or any other form of autobiographical writing. The goal is to practice these methods in guided tutorials and project-based work so that the students can apply them to their own research contexts. The course will provide best practices, as well as access to and familiarity with a Linux-based server environment to process text, including the extraction of words and phrases, topics, and psychological dictionaries. It will also practice basic machine learning using these text features to estimate survey scores that are associated with the text samples.

In addition, we will practice how to further process and visualize the frequency of language variables in R for secondary analyses, with training on how to pull these variables directly into R from the database and server environment.

In its entirety, the course aims to provide training in an entire state-of-the-art pipeline of computational text analysis, from text as input to final data visualization and secondary analysis in R.

It will not focus on the mathematical theory behind these analyses or expect students to code their own implementation of text analyses. Familiarity with Python is helpful but not required. Basic familiarity with R is expected.

What, concretely, will we do in this course?

The course will heavily rely on a Python codebase (see dlatk.wwbp.org) that serves as a (fairly) user-friendly Linux-based front end to a large variety of Python-based NLP and machine learning libraries (including NLATK and Scikit-learn). The course will cover:

- Readings covering the most important NLP and big data work in the social sciences

- Tutorials on creating, maintaining, and navigating databases in SQL

- Feature extraction of words and phrases, LDA topic,s and psychological dictionaries

- Correlational analysis of these features with suitable controls for false discovery rates

- A few basic machine learning examples on how to use language to predict demographic and psychological traits

- Integration of R and SQL (reading from and writing to databases from R, interfacing with DLATK input and output database tables)

Who is this for?

- Graduate students in psychology, education, sociology, communication, and other social sciences. Advanced undergraduates as space permits.

What would we like you to learn?

The goal of the course is to empower students to carry out a variety of different text analysis methods independently, and to write them up for peer review. At the end of the course, the student should:

- Understand the applicability of different language analysis methods to a given research question

- Understand considerations of statistical power in the language analysis space

- Be able to comfortably work with SQL databases

- Be able to use the Python-based code base DLATK (dlatk.wwbp.org) to carry out a basic set of language analysis methods spanning words, phrases, topics, and dictionaries, correlate them against variables of interest, shortlist, and visualize the results

- Be able to use off-the-shelf machine learning to predict psychological or other outcomes (e.g., ridge regression for continuous outcomes, support vector machines for classification tasks)

- Carry out secondary analyses in R, directly pulling DLATK output from the SQL databases Requirements

- Ability to clean data and wrangle it into a spreadsheet-style format suitable for database import

- Basic familiarity/comfort with programming (Python helpful but not necessary, basic familiarity with R strongly recommended)

- Willingness to learn basic Linux shell and server use

- Willingness to struggle and explore independently in project-based work

In weeks 1-8:

We will give class lectures on zoom Tuesdays and Thursdays (12:00-1:30pm). In addition, on your own time, every week, we have pre-recorded video tutorials for you. In these, we ask you to follow along as we walk you through running analyses, etc.

More or less every week there will be a homework set that is based on what’s shown in the tutorials. Please submit this homework, we will grade it. They will not be particularly hard.

In weeks 9 & 10:

There will still be lectures, and maybe tutorials.

We will split into teams of 3-4 students. We will either give you a data set, or work with you to get one for you around a particular interest or research question. You will go through the pipeline of methods you practiced in the course, and work together to write a final report that is a mock research paper: minimal introduction + methods + results with figures + discussion + supplement.

Homeworks: Assignments are given out on Thursday and are due the following Thursday before class.

Reading Types:

I know sometimes there are hard trade-offs you have to make with your time between courses and life. I’ve kept the reading list as short as I can for that reason.

So I’m using the following system to demarcate how critical a reading is:

A – This is essential reading, giving you the intellectual scaffold to understand the main points of the course. Without reading these, you may miss entire sets of concepts and insights.

B – this is very helpful reading, if you miss this; you may miss single concepts or insights.

C – these readings build out your understanding. If you skip these, you may miss details.

Grading:

- 70% assignments (the lowest one will be auto-dropped)

- 20% final project paper

- 10% zoom participation

PSYCH290 is currently ongoing (Spring 2025). The course application was HERE.**

For context, last year, we had about 28 applicants and could admit about ~12 students into the class. Prioritization is generally by seniority in PhD programs.